LIVE

Learning Outcomes

- Understand encoder setup and requirements

- Know how to manage a publishing point

- Know how to troubleshoot

- Understand high availability

- Know the various deployment options and their up- and downsides

Use Cases

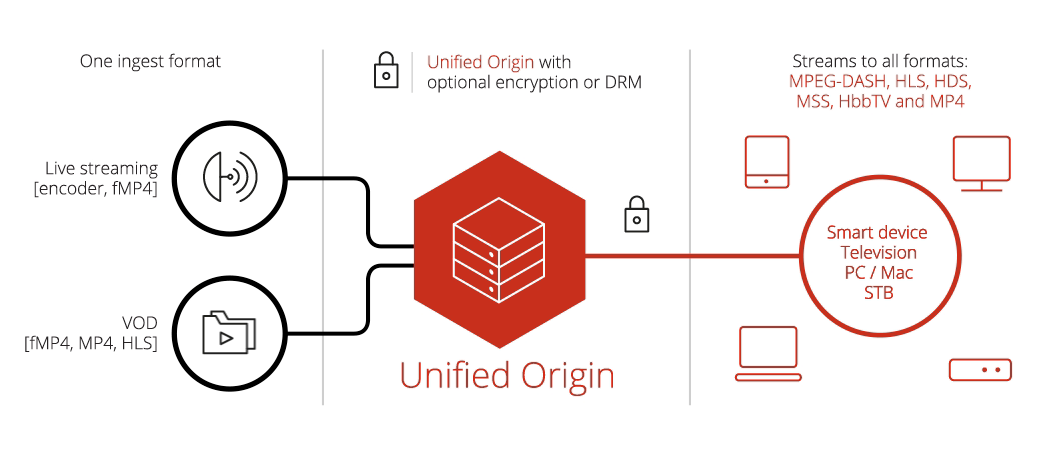

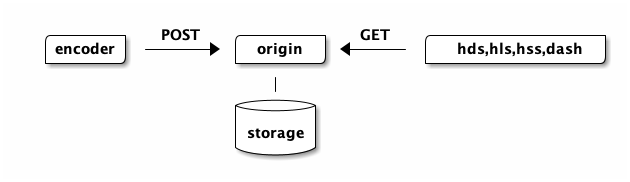

In a live setup, the encoder pushes a livestream over HTTP, using POST, to a so-called publishing point on the webserver (the origin).

The origin supports the same output formats as for VOD. The input must be fragmented MP4 or F4M; HLS, RTMP and ATS input are not supported for LIVE.

The encoder should push the livestream in fragments of around two seconds. It generates all audio and video tracks and either bundles them into one fragmented MP4 bitstream, or puts each track into its own fragmented MP4 bitstream and posts each stream over a separate HTTP connection. These and other requirements are described in the ‘Live Media and Metadata Ingest Protocol’. [1]
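To make the second approach concrete, here is a minimal sketch that posts each track over its own HTTP connection with curl, using chunked transfer encoding. It assumes each track has already been written to its own fragmented MP4 file and derives the `Streams(<id>)` name from the file name; both are illustrative choices, not requirements of the ingest protocol, and a real encoder streams fragments continuously instead of posting finished files. `push_tracks` and the `CURL` override are hypothetical helpers.

```bash
#!/bin/bash
# Sketch: push each track to the publishing point over its own HTTP
# connection. Assumptions: every track has been written to its own
# fragmented MP4 file, and the stream ID is taken from the file name
# (video_1000k.cmfv -> Streams(video_1000k)).
# Set CURL=echo to print the requests instead of sending them.

push_tracks() {
  local pubpoint_url=$1
  shift
  local track id
  for track in "$@"; do
    id=$(basename "$track")
    id=${id%.*}   # drop the file extension
    # One background curl per track, i.e. one HTTP connection per track.
    "${CURL:-curl}" -s -X POST \
      -H "Transfer-Encoding: chunked" \
      --data-binary "@${track}" \
      "${pubpoint_url}/Streams(${id})" &
  done
  wait   # block until every upload has finished
}
```

For example: `push_tracks "http://${aws_hostname}/live/channel1/channel1.isml" video.cmfv audio.cmfa`.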

After the origin has started to ingest a livestream, the publishing point (e.g. the ‘channel1’ directory) will contain the files listed below: the server manifest (.isml), a SQLite database (.db3) containing timeline information and typically several fragmented MP4 files (.ismv) containing the media data:

channel1/
  channel1.db3
  channel1.isml
  *.ismv

Options Schematically

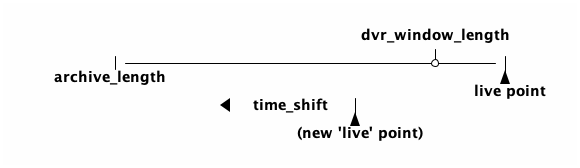

The diagram above outlines how the available options relate to the use cases described below. The options are: [2]

- ‘archive_segment_length’ sets the length of the segments that exist between live point and archive end

- ‘archive_length’ sets the total length of the archive

- ‘archiving’ turns archiving on or off (without archiving, only two segments are kept on disk)

- ‘time_shift’ offsets the live point (and DVR window) back in time within the ‘archive_length’

Attention

If you do not specify ‘archiving’, ‘archive_length’ and ‘archive_segment_length’ when setting up a live stream, the archive will be infinite! This default behaviour is only useful for event streaming (and even then we recommend configuring these options explicitly).
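To avoid ending up with the unbounded default by accident, you could wrap mp4split in a small function that refuses to run unless the archive options are given. This is a minimal sketch: `create_live_manifest` and the `MP4SPLIT` override are hypothetical, and the check that the segment length and DVR window fit inside ‘archive_length’ is our reading of how the options relate, not a rule taken from the Origin documentation.

```bash
#!/bin/bash
# Sketch: refuse to create a Live server manifest unless the archive
# options are set explicitly, so the archive cannot grow without bound.
# Set MP4SPLIT=echo to print the command instead of running it.

create_live_manifest() {
  local manifest=$1 archive_length=$2 archive_segment_length=$3 dvr_window_length=$4
  shift 4   # any further arguments are passed through to mp4split
  local v
  for v in "$archive_length" "$archive_segment_length" "$dvr_window_length"; do
    if ! [[ $v =~ ^[0-9]+$ ]] || [ "$v" -eq 0 ]; then
      echo "error: archive options must be positive integers (seconds)" >&2
      return 1
    fi
  done
  # Assumption: segments and DVR window must fit inside the archive.
  if [ "$archive_segment_length" -gt "$archive_length" ] ||
     [ "$dvr_window_length" -gt "$archive_length" ]; then
    echo "error: segment length and DVR window must not exceed archive_length" >&2
    return 1
  fi
  "${MP4SPLIT:-mp4split}" -o "$manifest" \
    --archiving=1 \
    --archive_length="$archive_length" \
    --archive_segment_length="$archive_segment_length" \
    --dvr_window_length="$dvr_window_length" \
    "$@"
}
```

For example, `create_live_manifest channel1.isml 1200 300 600 --restart_on_encoder_reconnect` produces the archive settings used in the hands-on section.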

Pure Live

In ‘Pure Live’ there is no archive and no (or a very small) DVR window. This more or less mimics a linear broadcast channel.

Pure Live with Archiving

If you wish to publish content to your catch-up service, ‘archiving’ can be added. The webserver then maintains a ‘rolling buffer’ storing content within the set --archive_length.

DVR with Archiving

Here the previous settings are combined: an archive is maintained and a specific length is set for the DVR window, allowing the viewer to rewind back in time. As the content is archived, catch-up clips can be created from the archive.

Events

Publishing points can be re-used by adding an EventID to the publishing point URL. This creates a separate archive for each event while maintaining the same playout URL. This can typically be selected in the encoder UI. The URL is formatted as follows:

http(s)://<domain>/<path>/<ChannelName>.isml/Events(<EventID>)/Streams(<StreamID>)
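If you script your ingest URLs rather than typing them into the encoder UI, a small helper following the format above keeps them consistent. `ingest_url` is a hypothetical name, and the sketch only builds the string.

```bash
#!/bin/bash
# Sketch: build the ingest URL for a publishing point, optionally with an
# EventID, following the URL format shown above.

ingest_url() {
  local pubpoint_url=$1 stream_id=$2 event_id=${3:-}
  if [ -n "$event_id" ]; then
    echo "${pubpoint_url}/Events(${event_id})/Streams(${stream_id})"
  else
    echo "${pubpoint_url}/Streams(${stream_id})"
  fi
}
```

Without an EventID this yields the same form as the `PUB_POINT_URI` used with ffmpeg.sh in the hands-on section (`.../channel1.isml/Streams(test)`).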

Restart and Catchup TV

Using the restart and catch-up options, you can restrict where a viewer can navigate to in time, or provide a ‘restart’ or ‘start over’ button. This is achieved by adding a query parameter with a timestamp or time range to the URL.

Scenarios and manifest types:

- Passing only a start time, the Origin returns a LIVE manifest, but with a specific DVR window allowing rewinding to the beginning of the program.

- Passing a start time and end time in the future, the Origin returns a manifest allowing rewinding to the beginning of the program, but automatically ending at the given end time.

- Passing a start time and end time in the past, the Origin returns a VOD manifest for the program.
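The three scenarios boil down to a simple decision on the end time. The sketch below only mirrors the documented behaviour to make the rules explicit; it is not how Origin implements them, and `manifest_type` is a hypothetical helper working on Unix epoch seconds.

```bash
#!/bin/bash
# Illustration only: which kind of manifest each scenario above leads to.
# This mirrors the documented behaviour; it is not Origin's code.
# Arguments are Unix epoch seconds; "now" defaults to the current time.

manifest_type() {
  local start=$1 end=${2:-} now=${3:-$(date +%s)}
  if [ -z "$end" ]; then
    echo "live-dvr"          # start only: Live, DVR window back to start
  elif [ "$end" -le "$start" ]; then
    echo "invalid range" >&2
    return 1
  elif [ "$end" -gt "$now" ]; then
    echo "live-until-end"    # end in the future: Live, ends at end time
  else
    echo "vod"               # end in the past: VOD manifest
  fi
}
```

With the current time at 20:22 UTC on 28 July 2015 (epoch 1438114920), a program from 20:00 to 20:30 gives `live-until-end`, while 20:00 to 20:20 gives `vod`.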

Playout Control

All the features described in ‘player URLs’ that are available to HLS clients, such as ‘limiting bandwidth use’, ‘using dynamic track selection’ and ‘select variant stream’, are also available for LIVE.

Live-specific options such as ‘time_shift’ (see above) are also available, as is the use of different manifests for playout control.

Subtitles

As for VOD, the origin supports subtitles: it ingests subtitle samples stored in a fragmented MP4 container. The encoder should POST the subtitles to the publishing point as one track per language.

Fragments consist of either VTTCue samples (text/wvtt), XML-based TTML fragments (EBU-TT, SMPTE-TT, DFXP or CFF-TT) or IMSC1. CEA-608 or CEA-708 closed captions may also be embedded in the AVC video stream.

Subtitle support varies greatly from player to player, and support in more current playout formats such as MPEG-DASH can change from month to month. Make sure you are using the latest version of your player and are aware of the limitations of its support.

Timed Metadata, SCTE 35 and Dynamic Ad Insertion (DAI)

When streaming Live, metadata can be used to ‘mark’ a certain timestamp in the stream. Such a mark is also called a ‘cue’. Markers are pushed to a publishing point as part of a separate track and are carried in SCTE 35 messages which, like the contents of all other tracks, need to be packaged in fMP4 containers.

SCTE 35 messages can carry various kinds of data; here we are interested in their ability to cue splice points in a stream.

A splice point is a specific timestamp corresponding to an IDR frame, offering the opportunity to seamlessly switch the livestream between different clips. Splice points can be used to cue:

- (Ad) insertion opportunities

- Start and end points of programs

If the cue marking a splice point does not coincide with the start of a media segment (an IDR frame) in the stream, by default the encoder pushing the livestream needs to insert an additional IDR frame at the timestamp signaled in the cue. If the --splice_media option is enabled, Origin will instead splice frame-accurately, and the part of the media segment containing the splice point will be merged with the previous or next segment.
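Whether a cue needs an extra IDR frame (or --splice_media) comes down to whether its timestamp falls on a segment boundary. The sketch below checks that with integer arithmetic. It assumes fixed-length segments starting at timestamp 0, with cue and segment duration in the same timescale (for example 10000000 ticks per second); `cue_alignment` is a hypothetical helper.

```bash
#!/bin/bash
# Sketch: does a splice cue land on a segment (IDR) boundary?
# Assumptions: fixed-length segments starting at timestamp 0, and cue
# and segment duration expressed in the same timescale.

cue_alignment() {
  local cue=$1 segment_duration=$2
  local offset=$(( cue % segment_duration ))
  if [ "$offset" -eq 0 ]; then
    echo "aligned"            # the cue coincides with a segment start
  else
    echo "offset $offset"     # ticks past the start of the segment
  fi
}
```

With 1.92-second segments at 10000000 ticks per second (19200000 ticks), a cue at 197000000 reports `offset 5000000`, i.e. half a second into a segment.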

In addition to splicing media segments if necessary, Origin will signal the splice points in the Apple HLS and MPEG-DASH client manifests. A third-party service can then be used to insert a clip at the splice point to create an ad insertion or ad replacement workflow. [10]

A demo can be found online. [11]

Deployment and Workflows

Encoding requirements

If a livestream contains multiple bitrates to support Adaptive Bitrate (ABR) streaming, the IDR frames of the video tracks must have identical timestamps to ensure it is possible for the player to switch between them at the start of each new fragment without causing significant degradation to the rendered video.

The fragment length of your encoder output should be chosen so that each fragment, or at least every few fragments, contains a whole number of audio and video frames (for example, 1.92-second fragments for 25 fps video with 48 kHz audio). For a more detailed overview of how the output of your encoder should be configured, combined with methods to verify your output, please consult our ‘Encoder Requirements’ documentation. [3]
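The 1.92-second example works because 1.92 s holds exactly 48 video frames at 25 fps and exactly 90 audio frames at 48 kHz. The check below does that arithmetic for any duration in milliseconds. It assumes AAC audio with 1024 samples per frame, and `whole_frames` is a hypothetical helper.

```bash
#!/bin/bash
# Sketch: check whether a fragment duration holds a whole number of video
# and audio frames. Assumption: AAC audio with 1024 samples per frame.

whole_frames() {
  local dur_ms=$1 fps=$2 sample_rate=$3
  local samples_per_frame=1024
  # video frames = dur_ms * fps / 1000
  if (( dur_ms * fps % 1000 != 0 )); then
    echo "no: $dur_ms ms is not a whole number of video frames"
    return 1
  fi
  # audio frames = dur_ms * sample_rate / (1000 * samples_per_frame)
  if (( dur_ms * sample_rate % (1000 * samples_per_frame) != 0 )); then
    echo "no: $dur_ms ms is not a whole number of audio frames"
    return 1
  fi
  echo "yes: $(( dur_ms * fps / 1000 )) video frames," \
       "$(( dur_ms * sample_rate / (1000 * samples_per_frame) )) audio frames"
}
```

`whole_frames 1920 25 48000` prints `yes: 48 video frames, 90 audio frames`. A plain 2-second fragment fails the audio check, but `whole_frames 8000 25 48000` succeeds, so 2-second fragments only line up every four fragments.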

For each of the protocols there are specific, optimal settings. These are outlined in the ‘Recommended Live Settings’ documentation. [4]

REST API

The REST API uses the HTTP verbs POST, GET, PUT and DELETE to Create, Read, Update and Delete a publishing point. The request or response body can contain a publishing point server manifest formatted as an XML SMIL file. [5]

The publishing point resource URL has the following syntax:

http(s)://<hostname>/<path>/<publishingpoint>.isml

You can use curl to work with SMIL files and to create, delete, etc. the publishing point. Note that mp4split can also create a publishing point.

Attention

For the REST API to work, a separate virtual host and (sub)domain must be used.
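A minimal sketch of the CRUD mapping described above, using curl against a publishing point URL on the separate API virtual host (for example `http://api.example.com/live/channel1/channel1.isml`; that hostname is hypothetical). The verb-per-operation mapping follows this section. Check the REST API documentation [5] for the exact requests your Origin version expects. The `pp_*` function names and the `CURL` override are hypothetical helpers.

```bash
#!/bin/bash
# Sketch of the Create/Read/Update/Delete mapping described above.
# $1 is the publishing point URL on the API virtual host,
# $2 (create/update only) is a local SMIL server manifest file.
# Set CURL=echo to print the requests instead of sending them.

pp_create() { "${CURL:-curl}" -s -X POST --data-binary "@$2" "$1"; }   # Create
pp_read()   { "${CURL:-curl}" -s -X GET "$1"; }                         # Read
pp_update() { "${CURL:-curl}" -s -X PUT --data-binary "@$2" "$1"; }    # Update
pp_delete() { "${CURL:-curl}" -s -X DELETE "$1"; }                      # Delete
```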

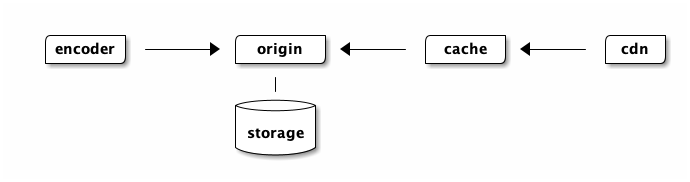

Separation of Ingest and Egress

Ingest must proceed uninterrupted, so requests from viewers must not be allowed to overload the ingesting origin. This can be achieved by using caching.

In this case a ‘shield cache’, e.g. Nginx reverse-proxy caching, ensures each fragment is requested from the origin only once.

Note

Unfortunately, mounting a publishing point read-only on another origin or over NFS, so that multiple ‘egress’ origins can read from it and egress can scale independently from ingest, does not work. The read side can be overloaded to the point where it starves the write side, effectively breaking the publishing point. Caching (potentially with rate-limiting) is the way to solve this.

Redundancy

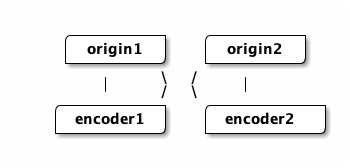

To create a redundant setup, a combination of two encoders and two origins can be used (other configurations are also possible).

This setup has the following requirements:

- encoders are time-aligned so they insert the same timestamp into the chunks they post to the origin

- the same options are used when creating the publishing point

- encoder and origin clocks are set to wall-clock time in UTC

Failover

Starting a second origin or replacing an origin in a pool requires:

- creating a publishing point on the newly started origin (using the same options as for the other publishing points)

- synchronizing the archive of the new origin with that of an existing one.

Since the origin handles duplicate fragments (by simply ignoring any fragments that are already ingested) and the archive on disk is already in a format that the origin can ingest, we can simply POST the archived files from the first origin to the second origin with curl:

#!/bin/bash
curl --data-binary @/var/www/chan1/chan1-33.ismv \
  -X POST "http://origin2/chan1.isml/Streams(chan1)"
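To replay a whole archive rather than a single file, the same request can be looped over every .ismv file in the publishing point directory. This is a sketch under the assumptions of the example above (archive in /var/www/chan1, target publishing point already created on origin2). `sync_archive` and the `CURL` override are hypothetical, and files are posted in plain glob (alphabetical) order.

```bash
#!/bin/bash
# Sketch: POST every archived .ismv file of a publishing point to the
# publishing point on another origin. The origin ignores fragments it has
# already ingested, so overlapping files do no harm.
# Set CURL=echo to print the requests instead of sending them.

sync_archive() {
  local archive_dir=$1 target_url=$2
  local restore files f
  restore=$(shopt -p nullglob || true)   # remember the caller's setting
  shopt -s nullglob                      # no match -> empty list
  files=("$archive_dir"/*.ismv)
  eval "$restore"
  for f in "${files[@]}"; do
    "${CURL:-curl}" -s --data-binary "@${f}" -X POST "$target_url" || return 1
  done
  echo "posted ${#files[@]} file(s) to $target_url" >&2
}
```

For example: `sync_archive /var/www/chan1 "http://origin2/chan1.isml/Streams(chan1)"`.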

Caching

With live deployments, caching is crucial.

At a minimum, use the local cache setup described in the VOD section (the Apache and Nginx setup). [6]

Acting as a so-called ‘shield cache’, the local cache keeps load off the origin. It protects against CDNs requesting the same content many times from many POPs at once.

The origin sets the following HTTP headers when live streaming: [7]

- Last-Modified time

- ETag

- Cache-Control and Expires
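To see which of these headers a given response carries, you can dump the response headers with curl and filter them. `cache_headers` is a hypothetical helper, and the example URL is the hands-on channel used later in this chapter.

```bash
#!/bin/bash
# Sketch: keep only the caching-related response headers.
# Usage:
#   curl -s -D - -o /dev/null "http://${aws_hostname}/live/channel1/channel1.isml/.mpd" | cache_headers

cache_headers() {
  # HTTP header lines end in CRLF; strip the CR before matching.
  tr -d '\r' | grep -iE '^(Last-Modified|ETag|Cache-Control|Expires):'
}
```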

Note

Please check the referenced documentation to see why these headers are set.

Virtualisation, Cloud, and Container

The same options and possibilities apply as with VOD.

Deploying a live channel as a container, e.g. with Docker, has the added benefit that channels are managed individually: each container hosts the webserver that maintains the channel's publishing point. If something is wrong with a channel, it can be inspected or restarted independently of other channels, which is not possible when all channels are deployed on one origin.

Monitoring

You can retrieve information about the publishing point with the following URLs. These URLs are virtual extensions to the ‘.isml’ and are handled by the Origin.

| URL to publishing point | Description |
|---|---|
| /state | Returns a SMIL file containing the state of the publishing point and the time of the last update. |
| /archive | Returns a SMIL file containing an overview of the archive (including start and end time). |

A publishing point can be in one of the following states: [8]

| State | Description |
|---|---|
| idle | Encoder has sent no data yet, publishing point is empty. |
| starting | Encoder has sent the first fragment, announcing the stream. |
| started | Publishing point has ingested media fragments from encoder (As Unified Origin is a stateless server, ‘started’ does not imply that fragments are currently being ingested.) |
| stopping | Encoder is stopping and ingest is closing down. (End Of Stream signal (EOS) was received on at least one stream and Unified Origin is waiting to receive EOS on all streams.) |
| stopped | Encoder has stopped, no ingest is happening. EOS was received on all streams. Content that is part of the DVR window can still be viewed and is now VOD instead of Live. |
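For scripted monitoring, you can extract the state from the /state response and poll until a wanted state is reached. This is a sketch under an assumption about the response layout: it expects the state in an element such as `<meta name="state" content="started" />`, so compare it with the actual output of your Origin version and adjust the pattern if needed. `pubpoint_state` and `wait_for_state` are hypothetical helpers.

```bash
#!/bin/bash
# Sketch: read the state from the SMIL returned by <publishing point>/state.
# Assumption: the state is carried as <meta name="state" content="..." />.

pubpoint_state() {
  sed -n 's/.*name="state"[^>]*content="\([a-z]*\)".*/\1/p' | head -n 1
}

# Poll until the publishing point reaches the wanted state, or give up.
wait_for_state() {
  local url=$1 wanted=$2 tries=${3:-30} state=""
  while [ "$tries" -gt 0 ]; do
    state=$(curl -s "$url/state" | pubpoint_state)
    [ "$state" = "$wanted" ] && return 0
    tries=$((tries - 1))
    sleep 2
  done
  echo "state is '${state:-unknown}', expected '$wanted'" >&2
  return 1
}
```

For example: `wait_for_state "http://${aws_hostname}/live/channel1/channel1.isml" started`.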

The ‘/archive’ call can be used to track discontinuities.

Troubleshooting

Take the following steps to determine the exact issue you are experiencing:

- Check license key

- Check publishing point state

- Check server log files

- Check encoder settings

- Check release notes

- Check your setup

Please note that a restart (‘apachectl -k restart’) will stop all connections, incoming and outgoing.

A graceful restart (‘apachectl -k graceful’) will not: outgoing streams will continue. However, a Live ingest (the encoder POSTing to the publishing point) will stop even with a graceful restart. There is no way to restart Apache and keep the ingest intact: the communication between encoder and origin uses a single, long-standing POST with chunked transfer encoding, and the restart breaks its TCP connection.

Logrotate will also reset all connections, so you should either turn off logrotate or use rotatelogs instead. [9]

Hands-On

- Create a new publishing point

- Use an encoder to push a livestream to Origin and test playout

- Add Timed Metadata (SCTE 35)

- Look at High Availability, Redundancy and Failover

Create a Publishing Point

A publishing point consists of a Live server manifest in a directory of its own; a server manifest alone is not enough.

You can create this directory yourself, let a script do it, or let Unified Origin take care of creating a full publishing point using its RESTful API.

Specifying the name of the manifest as the output on your mp4split command-line is all that is needed to create a Live server manifest with a default configuration.

However, this default configuration is far from optimal. That’s why we recommend explicitly specifying all options related to archiving, so that you can tailor the publishing point to your exact needs.

Explicitly specifying a multiple of your livestream’s fixed GOP length for the --hls.minimum_fragment_length option, setting --hls.client_manifest_version to ‘4’ (see the VOD chapter) and enabling --restart_on_encoder_reconnect are recommended, too.

#!/bin/bash
mp4split -o channel1.isml \
  --hls.minimum_fragment_length=144/25 \
  --hls.client_manifest_version=4 \
  --archiving=1 \
  --archive_length=1200 \
  --archive_segment_length=300 \
  --dvr_window_length=600 \
  --restart_on_encoder_reconnect

# The API would run the following lines, but for brevity
# it's not set up for the certification
sudo mkdir -p /var/www/tears-of-steel/live/channel1
sudo mv channel1.isml /var/www/tears-of-steel/live/channel1/
sudo chown -R www-data:www-data /var/www/tears-of-steel/live/channel1/

Once you have run the above commands, use an editor to open the channel1.isml Live server manifest you created and try to understand the settings that are part of the default configuration.

Attention

Note the file extension for a Live server manifest (.isml) differs from that of a VOD server manifest (.ism).
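The value 144/25 passed to --hls.minimum_fragment_length above is 144 frames at 25 fps (5.76 seconds), which is six GOPs of 24 frames. The sketch below derives such a value and checks an existing one. It assumes the GOP length is counted in frames, as with GOP_LENGTH=24 and FRAME_RATE=25 for ffmpeg.sh in the next section. `min_fragment_length` and `is_gop_multiple` are hypothetical helpers.

```bash
#!/bin/bash
# Sketch: derive --hls.minimum_fragment_length from a fixed GOP.
# Assumption: the GOP length is counted in frames.

min_fragment_length() {
  local gop_frames=$1 fps=$2 gops=$3
  echo "$(( gop_frames * gops ))/${fps}"   # seconds, as a fraction
}

# Check that an "N/D" (or plain N) value in seconds is a whole number of GOPs.
is_gop_multiple() {
  local value=$1 fps=$2 gop_frames=$3
  local num=${value%/*} den=1
  if [[ $value == */* ]]; then
    den=${value#*/}
  fi
  # frames = num * fps / den; whole GOPs when num*fps is divisible by den*gop
  (( num * fps % (den * gop_frames) == 0 ))
}
```

`min_fragment_length 24 25 6` prints `144/25`; `is_gop_multiple 100/25 25 24` fails, because 4 seconds is 100 frames, which is not a multiple of 24.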

Start a Livestream

Push a livestream to the publishing point that you have just created, using ffmpeg.sh:

#!/bin/bash
URL=http://${aws_hostname}/live/channel1/channel1.isml
export FRAME_RATE=25
export GOP_LENGTH=24
export PUB_POINT_URI=${URL}'/Streams(test)'
./ffmpeg.sh

Test playback (remember to use the URL that is specific to your playout format of choice):

curl -v http://${aws_hostname}/live/channel1/channel1.isml/.mpd

You can also test playback in the player.

State

Check the state of your publishing point; it should be ‘started’:

#!/bin/bash
curl -v http://${aws_hostname}/live/channel1/channel1.isml/state

Discontinuities

Stop the encoder, wait a few seconds and start it again. Because the publishing point was created with --restart_on_encoder_reconnect, and wall-clock time ‘now’ (in UTC) is used as the start time, ingest resumes when the encoder is restarted.

However, because the encoder stopped, there will be a gap in the timeline: a discontinuity. You can check this with the ‘/archive’ call:

#!/bin/bash
curl -v http://${aws_hostname}/live/channel1/channel1.isml/archive
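Once you have the start and end times of the archived ranges, spotting a discontinuity is a matter of comparing each range's start with the previous range's end. This is a minimal sketch that assumes the ranges have already been extracted from the /archive response into "start end" lines (in seconds, sorted by start). The extraction step is left out because it depends on the exact response format, and `find_gaps` is a hypothetical helper.

```bash
#!/bin/bash
# Sketch: report gaps (discontinuities) between archived time ranges.
# Input: one "start end" pair per line, in seconds, sorted by start.

find_gaps() {
  awk 'NR > 1 && $1 > prev_end {
         printf "gap from %s to %s (%s s)\n", prev_end, $1, $1 - prev_end
       }
       { if (NR == 1 || $2 > prev_end) prev_end = $2 }'
}
```

For example, `printf '0 600\n600 900\n930 1200\n' | find_gaps` reports a 30-second gap between 900 and 930.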

Timeshift

You can create a new publishing point using the --time_shift option or use it as a query parameter. Start the encoder and request the stream with a time shift of 30 seconds; in a player you will see it is 30 seconds behind ‘now’:

#!/bin/bash
curl -v "http://${aws_hostname}/live/channel1/channel1.isml/.mpd?time_shift=30"

Restart and Catch-Up

- If you pass a start time the Origin returns a LIVE manifest with a specific DVR window that allows you to rewind to the beginning of the program.

- If you pass a start time and end time in the future the Origin returns a manifest that allows you to rewind to the beginning of the program, but automatically ends at the given end time.

- If you pass a start time and end time in the past the Origin returns a VOD manifest of the program.

Assuming the current time is 2015-07-28T20:22:00.000 (20:22 on 28 July 2015):

| Option | Description |
|---|---|
| t=2015-07-28T20:00:00.000 | Start at the beginning of the eight o’clock news. |
| t=2015-07-28T20:00:00.000-2015-07-28T20:30:00.000 | The complete eight o’clock news program and the weather until it ends at 20:30. |
| t=2015-07-28T20:00:00.000-2015-07-28T20:20:00.000 | The complete eight o’clock news program without the weather as a VOD manifest. |

The above is ideal if you have a 24-hour rolling DVR window, or similar, for Live.

Virtual Clips

Request a virtual clip by adding a time range to the URL (you have to calculate the timestamps for the begin and end time yourself, then replace <begin> and <end>):

#!/bin/bash
curl -v "http://${aws_hostname}/live/channel1/channel1.isml/.mpd?t=<begin>-<end>"
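One way to calculate the timestamps is to start from Unix epoch seconds and format them in the ISO style used in the restart and catch-up table. This sketch relies on GNU date (`date -d @SECONDS`), which may not be available on every system, and `clip_range` is a hypothetical helper.

```bash
#!/bin/bash
# Sketch: format a begin/end pair (Unix epoch seconds) as the
# <begin>-<end> value of the t= query parameter, in UTC.
# Requires GNU date for "date -d @SECONDS".

clip_range() {
  local begin=$1 end=$2
  local fmt='+%Y-%m-%dT%H:%M:%S.000'
  echo "$(date -u -d "@$begin" "$fmt")-$(date -u -d "@$end" "$fmt")"
}

# Example: the last 10 minutes before now
#   now=$(date +%s)
#   curl -v "http://${aws_hostname}/live/channel1/channel1.isml/.mpd?t=$(clip_range $((now - 600)) "$now")"
```

For instance, `clip_range 1438113600 1438114800` yields the 20:00 to 20:20 news clip from the table above.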

Query Parameters

As with VOD, the output format can be changed by using query parameters. You can try the following:

#!/bin/bash
curl -v "http://${aws_hostname}/live/channel1/channel1.isml/.m3u8?hls_client_manifest_version=1"

Timed Metadata

When streaming Live, metadata can be used to ‘mark’ a certain timestamp in the stream, also called a ‘cue’. These markers or cues are pushed to a publishing point as part of a separate track and are carried in SCTE 35 messages, which, like the contents of all other tracks, need to be packaged in fMP4 containers.

First create a new publishing point with the additional options --timed_metadata, to enable passthrough of timed metadata, and --splice_media, to enable Origin to splice media segments at SCTE 35 markers:

#!/bin/bash
mp4split -o channel2.isml \
  --hls.minimum_fragment_length=144/25 \
  --hls.client_manifest_version=4 \
  --archiving=1 \
  --archive_length=1200 \
  --archive_segment_length=300 \
  --dvr_window_length=600 \
  --restart_on_encoder_reconnect \
  --timed_metadata \
  --splice_media

# The API would run the following lines, but for brevity
# this isn't set up for the certification
sudo mkdir -p /var/www/tears-of-steel/live/channel2
sudo mv channel2.isml /var/www/tears-of-steel/live/channel2
sudo chown -R www-data:www-data /var/www/tears-of-steel/live/channel2

Next we start FFmpeg, pointed at channel2 (see the previous section).

With the live stream running, we can now insert metadata. Here we do this with push_input_stream (where --avail 24 specifies the interval at which the markers are inserted):

#!/bin/bash
URL=http://${aws_hostname}/live/channel2/channel2.isml
push_input_stream -u "${URL}" --avail 24

The metadata will be present in the media playlist(s). You can check this by requesting the master playlist and then one of the media playlists (variants) it references.
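The check described above can be scripted: fetch the master playlist, take the first variant and list the tags that could carry the markers. This sketch assumes the variant URIs in the master playlist are relative, and that the markers show up as `#EXT-X-DATERANGE` or `#EXT-X-CUE...` tags. Which tags are actually written depends on your Origin configuration, so adjust the pattern to what you see. `first_variant` and `show_markers` are hypothetical helpers.

```bash
#!/bin/bash
# Sketch: list marker tags in the first variant of an HLS master playlist.
# Assumptions: variant URIs are relative to the master playlist, and the
# markers appear as #EXT-X-DATERANGE or #EXT-X-CUE* tags.

first_variant() {
  # The URI line directly follows the first #EXT-X-STREAM-INF tag.
  awk '/^#EXT-X-STREAM-INF/ { getline; print; exit }' | tr -d '\r'
}

show_markers() {
  local master_url=$1 variant
  variant=$(curl -s "$master_url" | first_variant)
  # Resolve the relative variant URI against the master playlist URL.
  curl -s "${master_url%/*}/${variant}" | grep -E '^#EXT-X-(DATERANGE|CUE)'
}
```

For example: `show_markers "http://${aws_hostname}/live/channel2/channel2.isml/.m3u8"`.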

High-availability, Redundancy and Failover

There is no hands-on section for this as it would require multiple origins and encoders.

The online documentation does contain a chapter on ‘redundancy and failover’ [12], which has sections on:

- Redundancy

- Dual Ingest Setup (Failover)

- Recreating the Live Archive

- Use of UTC

Configuring the encoder to use UTC for timestamps is extremely important; this is repeated throughout the documentation. [13] [14] [15]

Footnotes

| [1] | https://tools.ietf.org/html/draft-mekuria-mmediaingest-00 |

| [2] | http://docs.unified-streaming.com/faqs/general/options.html#live-only |

| [3] | http://docs.unified-streaming.com/documentation/live/encoders/configuring-encoders.html |

| [4] | http://docs.unified-streaming.com/documentation/live/recommended-settings.html |

| [5] | http://docs.unified-streaming.com/documentation/live/publishing-points.html#configuration |

| [6] | http://docs.unified-streaming.com/tutorials/caching/index.html |

| [7] | http://docs.unified-streaming.com/documentation/live/webserver.html#http-response-headers |

| [8] | http://docs.unified-streaming.com/documentation/live/publishing-points.html#state |

| [9] | http://httpd.apache.org/docs/2.4/programs/rotatelogs.html |

| [10] | http://docs.external.unified-streaming.com/documentation/live/scte-35.html |

| [11] | http://demo.unified-streaming.com/scte35 |

| [12] | http://docs.unified-streaming.com/documentation/live/advanced-live-options.html |

| [13] | http://docs.unified-streaming.com/documentation/live/advanced-live-options.html#coordinated-universal-time-utc |

| [14] | http://docs.unified-streaming.com/documentation/live/recommended-settings.html#playout-options |

| [15] | http://docs.unified-streaming.com/documentation/live/encoders/configuring-encoders.html#utc-timestamps-should-be-used |